The Nick Bostrom Scandal Differentiates Effective Altruism From Rationalism

San Francisco mentalities vs. the cool, cold realms of math-mindedness

This is a guest post by

. You should subscribe to his Substack:

I am sometimes criticized for being imprecise, and one reason why is my inability to differentiate between Effective Altruists (EA) and Rationalists when I’m writing fast. This is a mostly valid criticism; I get in the flow and treat them as one-and-the-same when they aren’t, and people in both groups are right to be peeved at me.

Part of why that happens is I’m not either thing - I rub shoulders with both groups often, but I’m really part of a relatively small third group of people who bridge the gap between normal people (like your mom, if she’s normal) and small, fringe intellectual groups and movements. The downside of being not quite in either world is not being as intimate with the details of any given movement as its members, while the upside is being able to talk about the groups to other outsiders who don’t want to spend days learning specialized language.

This article falls into “explainer” territory - it’s aimed at the more normal side of my audience. The article is probably going to be wrong on a lot of very granular, detailed nuances, which means that people from those groups will probably be (rightfully!) pissed at me.

If you are outside those groups, you should know that I’m probably wrong in those little granular ways (and thus you should take my account with a little, granular unit of salt). It’s likely right in all the ways you care about -- the big picture stuff -- but you should take people in the comments seriously if they claim I’m wrong about some minor detail.

Rationalists

Rationalism is supposed to be - in a stripped-down technical sense, at least - a way of approaching thinking and belief. Rationalists tend to represent themselves as approaching both things in an emotionless, stripped-down way driven by data, reason, and provable fact.

If you told a rationalist that, say, gay adoption is good or bad, he (or, more rarely, she) is supposed to ask you for your evidence on that, then dispassionately weigh it in the more robotic parts of his brain. Modern rationalists actually have full-blown formulas (See here) for certain kinds of thinking and situations, mostly having to do with how they determine what they believe or how they make decisions.

In the social/imprecise sense of “what kind of dude am I dealing with if he identifies himself as a rationalist”, you are looking at someone who is significantly more likely than average to be utilitarian, to be mathy/nerdy/programmery, and to be autistic (or something similar). On average, it’s very important to them to be perceived as smart; if you write an article and a guy comes in and explains that had he written it he would have used bigger, less readily understood words, that’s a rationalist.

Importantly for this article, rationalists are much more likely than the general public to make an argument that is bonkers on its face, and only seems kind of reasonable if you build your argument top-to-bottom out of moving from logical conclusion to logical conclusion without much thought for things that seem intuitively moral.

This last is independently important for this conversation in a way that other rationalist characteristics aren’t. You could spend an entire lifetime at knitting-centric social groups and never hear an argument that children of some advanced age (say, 1-25 years old, with 25 being an outlier I’ve only seen a couple times) don’t have fully developed human brains, aren’t fully human as a consequence, and thus should be “abortable”. The same isn’t true for rationalist circles; it’s not exactly a common argument there, but if you stick around long enough you will eventually hear it made by at least one early-20s intellectual edgelord.

How that argument is received is, if anything, even more atypical. A standard-issue rationalist is supposed to hear that sort of argument and calmly assess the rationale behind it - i.e. to acknowledge that emotions tend to get in the way of making good decisions, and to weigh all the benefits being presented against all the potential costs before knee-jerking to a “no, we just don’t do things like that” conclusion.

Editor Nick sent me a little gem called the Litany of Tarski, which was apparently a favorite of rationalist dinosaur Eliezer Yudkowsky in the pre-EA era:

If the box contains a diamond, I desire to believe that the box contains a diamond; If the box does not contain a diamond, I desire to believe that the box does not contain a diamond; Let me not become attached to beliefs I may not want.

If you get one TLDR takeaway from this section, let it be this: Truth-seeking and view-justifying are not just baked into the DNA of rationalism - they are supposed to be the point of the whole thing. Shifting away from those values would mean a fundamental shift to a different system entirely.

EAs

EAs are a LOT like rationalists; they sprang out of the same type of dude. But as pointed out whenever I conflate them, they aren’t precisely the same thing. The first and biggest difference is that the EA label doesn’t, at its base, describe a way of thinking so much as it does a goal built from a particular way of thinking.

EAs are still overwhelmingly utilitarian; they are also still very interested in being considered smart. At least at some point, most EAs were rationalists - one movement sprang from personnel acquired from the other. But they’ve since diverged; you don’t have to be a rationalist to be an EA.

In the last section, I painted a picture of a sort of pale-skinned-indoor-kid who doesn’t read social situations all that well but loves numbers. While acknowledging this isn’t all rationalists, the reason why it’s a LOT of them is because there’s exactly one pre-req to wanting to be a rationalist. You need a desire to think in a better way and be generally “More right”, and that’s it; the most well-known rationalist website is unironically called LessWrong.

EAs tend to be the same kind of guys (or at least much closer to the same type of guy than someone from the general population), but with one huge difference - they are oriented towards a stated goal instead of a stated mindset. EAs like being seen as smart, analytical people who make good decisions, but their terminal value is actually being seen as smart, analytical people who are doing a lot of charity better than other people.

EA’s goal-orientation makes them subject to selection effects “pure” rationalists aren’t. While a pure rationalist can be a good member of his tribe just by thinking in a certain way, an EA is only a good EA to the extent they drive more and more charitable giving, amplified by how convincingly effective their usage of the resulting funds is. Barring effectiveness, they will also accept weird usages of the funds, as with the Patient Philanthropy Project’s promise to take your money and do nothing at all with it for an indeterminate amount of time:

For low-level EA-aligned folks, this just means giving away a certain amount of their income to sources that seem to make good charitable use of it. At a leadership level, this means being the kind of person people would give money to. This means that while EA rank-and-file might be very rationalisty indeed, an EA leader trends towards being the kind of person who could convince a conventional billionaire to drop several million dollars on water filter research (or something).

If you take one thing away from this section, let it be that today’s EA is different from a rationalist in that having a socially acceptable image is a real and present requirement for being a good member of their tribe.

The Overton Window

The Overton window is a concept describing the spectrum of things we find acceptable to discuss at any given time, originally intended to describe what ideas were and weren’t politically viable. As an example, you’d find it much easier to push some slightly expanded social programs than you would full-blown communism. That’s because communism, for most, is much closer to being outside the Overton window; it’s something that most salt-of-the-earth Americans don’t think of as a reasonable thing to suggest.

To put it another way: Nice people don’t discuss certain concepts in polite settings. Remember earlier when I mentioned that hypothetical about “aborting” young children? That’s something that came from Peter Singer, a sort of proto-EA rationalisty-type philosopher. If you recoiled from it instinctually, it’s probably because “let’s murder children” is outside of the Overton window for most people; it’s not something most people want to or are capable of discussing in cool, logically-reasoned terms.

To rip something from Twitter:

The answer to this tweet’s question is something like “most people understand that sex offender registries are probably flawed in some significant ways, but saying we should be softer on sex offenders is considered outside the Overton window, so nobody powerful does it”.

Rationalists have historically tended to be less concerned about the Overton window than most, even to the point of actively bucking it. To the extent rationalists encounter something like Singer’s morally permissible infanticide or arguments that sex offenders are mistreated, they are kind of supposed to take them seriously. To the extent they can’t, they are admitting they are less rationalist than the ideal.

EAs might want to do the same thing, but are, as mentioned above, bound to a different set of practical motivations; where a rationalist arguably becomes more rationalist just by being willing to entertain ideas outside the window, an EA has to consider that being seen to talk about unacceptable things might make them be perceived as a bad steward for charitable funds. This makes them not only more sensitive to the Overton window than a pure rationalist, but sometimes even more so than a normal, everyday person.

Enter Nick Bostrom

Nick Bostrom is a Swedish-born philosophy professor at the University of Oxford. His philosophy PhD is from the London School of Economics, and as such comes complete with the kind of gravitas that gives him access to more mainstream media exposure. He runs an EA-friendly institute reliant on donations to function that maintains a certain level of relevance in the greater TEDtalk and The Economist normie-sphere.

He is, in short, kind of a big deal in the EA world. Bostrom is also a great example of someone who bridges the gaps between Rationalist-types and EAs; he works in the most rationalisty and nerdy of all EA sectors (artificial intelligence risk), but also comes with sufficient flash to impress even the most legitimacy-seeking of EA guys.

And, importantly, he’s in big big trouble.

Bostrom has found his way into a pickle by way of (as is the case for so many of us, given enough time) the unearthing of a quarter-century-old Usenet-style email chain. The emails you are about to read are from 1997, and for soon-to-be obvious reasons I’m going to do my best to relate the contents of the chain in as dry and factual a way as I can muster.

The context of the conversation appears to be Bostrom participating in an argument about conversation styles, specifically offensive conversational styles. In it, Bostrom is taking the position of defending offensive styles of speaking, so long as the styles are used to relay accurate information:

I have always liked the uncompromisingly objective way of thinking and speaking; the more counterintuitive and repugnant a formulation, the more it appeals to me given that it is logically correct.

Note the strong echoes of my description of rationalists. Bostrom is posturing as a champion of uncompromising objectivity; truth is truth, he indicates, and truth should be pursued. The best way for things to be correct, he posits, is for them to be logically correct. And the more something is hidden under repugnance - the more it’s an accurate statement to make that might otherwise be left in the cold, lonely world outside the Overton window - the happier he is to engage with it.

To talk about this effectively in the current year without noise drowning out the signal, Bostrom would need a relatively benign but relevant example to illustrate this concept. Preferably, this view would be something that would be controversial in an outlandish way nobody would suspect he actually subscribed to; see above where I used infanticide (which most of you don’t think I’m into) for that purpose. 26 years ago, though, Bostrom picked this:

Take for example the following sentence:

Blacks are more stupid than whites.

This having been said and then subsequently uncovered in 2023 is what scientists refer to as quite the whoopsie. But to drive in more coffin nails, Bostrom continued:

I like that sentence and think it is true. But recently I have begun to believe that I won’t have much success with most people if I speak like that. They would think that I were a “racist”: That I _disliked_ black people and thought that it is fair if blacks are treated badly. I don’t. It’s just that based on what I have read, I think it is probable that black people have a lower average IQ than mankind in general, and I think that IQ is highly correlated with what we normally mean by “smart” and stupid”. I may be wrong about the facts, but that is what the sentence means for me. For most people, however, the sentence seems to be synonymous with:

”I hate those bloody n_____!!!”

My point is that while speaking with the provocativness of unabashed objectivity would be appreciated by me and many other persons on this list, it may be a less effective strategy in communicating with some of the people “out there”. I think it is laudable if you accustom people to the offensiveness of truth, but be prepared that you may suffer some personal damage.

I am censoring “n_____” myself here; Bostrom typed and sent the whole word.

Bostrom’s views about discourse are reasonably in line with rationalist thinking at least, say, 10 years ago; he’s saying “There’s subjects that are outside the Overton window; we should be able to discuss those dispassionately. It may be that people will hear the worst possible version of them and punish us for it, but it doesn’t mean they are right and we are wrong by default”. But in doing so, he picked an example that was (then as well as now) actually outside the window, and now it’s coming back to bite him.

This is the first level of how Bostrom is screwed - the one where, right or wrong, he said an Overton-banned argument out in the open, and now people who wanted to take him down can club him with it, and are.

To be clear, not everyone is beating up on Bostrom for Overton-related reasons. There are people making identically worded criticisms of Bostrom BECAUSE they believe what he said is entirely disproved, and that to the extent it’s outside the Overton window it’s because it’s incorrect nonsense. But most people aren’t thinking about this that hard - they are working from a much more accessible positioning of “We have decided, as a polite society, that we don’t say this particular thing and wouldn’t even if it was correct.”

(There’s another level on which Bostrom is being criticized just for using a hard N-word in a representative hypothetical quote. I’m not getting into that much here; “can I use the N-word in quotes” seems like a whole different type of article, and one I don’t want to write).

I think if you don’t know me that well, at this point you might assume that I’m just trying to count coup on Bostrom and perhaps rationalists. That’s not what’s happening - I don’t mind either party, and I certainly don’t have the kind of grudge I’d need to get joy out of pointing out that Bostrom is getting dragged for making an uh-oh.

If you know me a little better, you might think I’m here to argue that 49-year-old men shouldn’t be dragged for stuff they said as 23-year-old men in an entirely different conversational climate. That’s a lot closer to the kind of thing I’m interested in (and I’d be glad to talk about it in the comments) but that’s not my focus here, either - it’s interesting, but not what the article is about.

What the article is about are the reactions to the Bostrom story in the vein of that first level of trouble - the one that disallows some kinds of talk not because it’s wrong on an object level, but because it’s deemed unacceptable to speak about at all. I’m concerned with how this is viewed by EAs and Rationalists respectively, and what that means for both groups.

Spoiler: For one group it means life and power, while for another it means death and irrelevance.

If you were trying to predict EA behavior in reaction to the Bostrom debacle based only off what I’ve told you, you’d probably come up with something like this:

Since EAs differ from rationalists in that they are more primarily interested in the goal of doing more of a certain kind of charity than they are being right about more things, they will probably drop Bostrom like the live coal he has become. They will universally disown him, lest his stink rub off on them and keep them from getting the normie-money they need to pursue their goals.

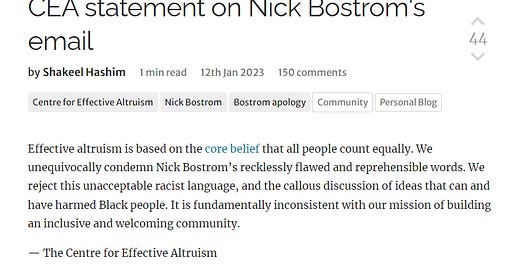

True to that line of thinking, this is about 100% of the discourse coming out of that zone. Here’s the (Sam Bankman-Fried-funded, and thus a bit more understandably jittery) Centre for Effective Altruism on the subject:

There isn’t any mention of Bostrom being wrong as such; you could believe Bostrom is right and still make this statement using the exact same wording without contradicting that belief. What it does mention is that these ideas are unacceptable - that whatever else they might be, they are outside of the Overton window and thus unacceptable for an EA to say.

As bad as I try to make that sound, understand that none of this is a litmus-test failure for EA membership. If anything, taking a truth-agnostic stance like this is a positive indicator that EAs can be financially viable in the long term - they’ve indicated in strong terms that they are willing to play ball, and being willing to play ball is what gets checks signed. Taking this stance means more money is given to EAs than otherwise, and that’s what an EA cares about.

For rationalists, the goals and field of play are different. A rationalist in the pure sense is largely someone who has declared that they will use weird, unconventional tactics if that’s what it takes to be right - that they will overcome the usual shortcomings of social desirability as a motive and bust through whatever walls and difficulties they need to to get closer to the truth of matters.

If a rationalist disagrees with Bostrom on a factual level, it’s not necessarily a litmus test failure in and of itself. There’s no rule against them examining evidence and coming to a disagreement, and there’s a fair number of rationalists who come out on the “nurture” side of race IQ gap nature/nurture debates.

But if they do what the EAs have done - saying “Listen, he might be right or wrong, but you just don’t talk about that shit, so he’s wrong”, that turns the litmus strip bright red. In bowing to the dictates of the Overton window, they melt the entire framework their philosophy sits on.

I’m really, really interested to see what happens here.

I first encountered the rationalists in the early-to-mid-2010s era, and immediately liked them despite disagreeing with them on a large percentage of things. I liked them because, at that time, whatever corner of the internet you found them congregating was a place where people would argue, often forcefully, about what was actually true.

Even at that time, if someone had come in and said “Hey, we can’t talk about this; it’s morally wrong to examine these ideas” they would have been laughed out of the room. Again, and I can’t stress this enough: The entire point of the movement was to actively avoid the kind of thinking that made you come to conclusions built from anything but data and logic. The whole aim was to actively open up the type of discussions that “We can’t talk about this, it’s outside the window” usually closed off.

A few years ago, that started to change. At some point, places like r/slatestarcodex started actively purging all their wrong-thinkers; they adopted the philosophy of the Overton window and blocked off any topics that might be considered taboo by the window’s standards. They’ve lightened up a bit in the past few years, but for a while the only topics you could talk about were those guaranteed to be inoffensive to anyone.

Today, that subreddit is a ghost town compared to what it once was. Anyone could have easily predicted they’d end up a shell of what they once were so long as they understood that the “killer app” of rationality was that willingness to talk about things outside of the Overton window; getting rid of it was stripping off the entirety of their competitive advantage.

Incidents like the Bostrom controversy are great tests for how much of that competitive edge rationalists are willing to retain in the face of disapproval. Can they think he’s wrong without abandoning rationality? Sure. Can they think he’s racist? Of course. But to the extent they think he’s wrong or racist because all good people should as opposed to because the facts and data argue for it, they give up the philosophy and flavor of thinking that justified their existence as a movement in the first place.

Thanks for the guest spot, buddy - 10/10 would do again.

There's just too much to respond to here, so this:

- the folks you describe as "rationalists" have been around forever. They are the true scientists (and heretics), while the rest of the population are much closer to sheep. There's a reason why you see, "To thine own self be true." carved all over universities.

- there are many Overton Windows. They vary depending on place and time, and are the subject of fierce battles. Such a battle is going on now, with something equivalent to a blitzkrieg having taken place about five years ago. The blitzkrieg has finally been noticed, and the recently installed Wokists are now being challenged. If humanity is lucky they will be soundly defeated.