Programming note: At the start of January, I announced a new publishing schedule where I would publish 1 article every weekend for paid subscribers only.

Today was supposed to be the next paywalled article, but since it is about academic scandals, I have decided to make it free for the public good.

I’m still going through a learning curve with this strategy, apparently. Today I learned not to make my weekend article about academic scandals. Next week I will return to paywalling the weekend article.

My article Four of the Latest Academic Scandals was relatively well received, so today I will write another in roughly the same format.

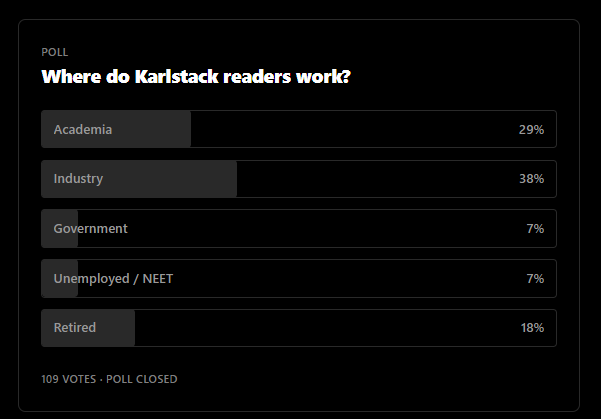

In that article I ran a poll that found 29% of my ~4,000 readers work in academia.

This readership composition makes sense, since Karlstack began as a blog focused solely on academia before branching out into politics, culture wars, crypto, etc.

The biggest surprise in this poll is that 18% of readers are retired!

I guess the readership skews older than I imagined.

How old are you?


The 45-year-olds in this poll will probably feel old being lumped in with 60-year-olds, but the poll allows a maximum of 5 options, so I constructed the brackets as best I could.

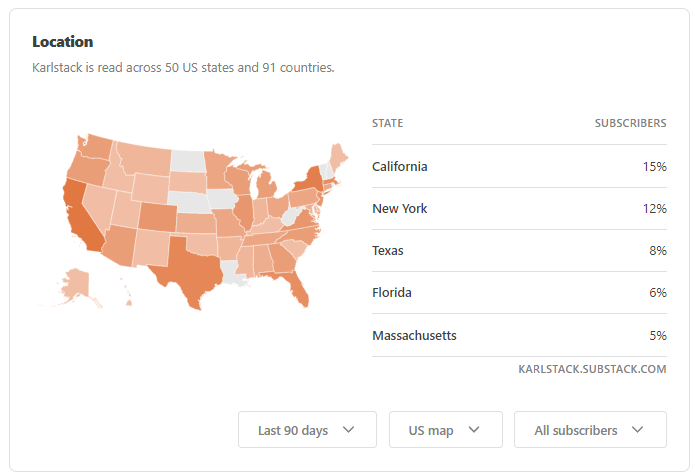

I *would* ask where you live, but as luck would have it, last week Substack added a new feature that breaks down readership by geographic area. These metrics indicate that Karlstack is read in 91 countries… mostly USA & Canada.

Shoutout to my 1 subscriber in Botswana!

Reminder: if you live in a 3rd-world country (or if you are a student), I will *heavily* discount a paid subscription. Email me at chrisbrunet@protonmail.com and I will hook you up.

Substack also now breaks readers down by state. It makes sense that the 4 most populous states (California, New York, Texas, Florida) are also the 4 states with the most Karlstack subscribers. It would be nice to drill one level of granularity further and show these numbers as readership per capita.

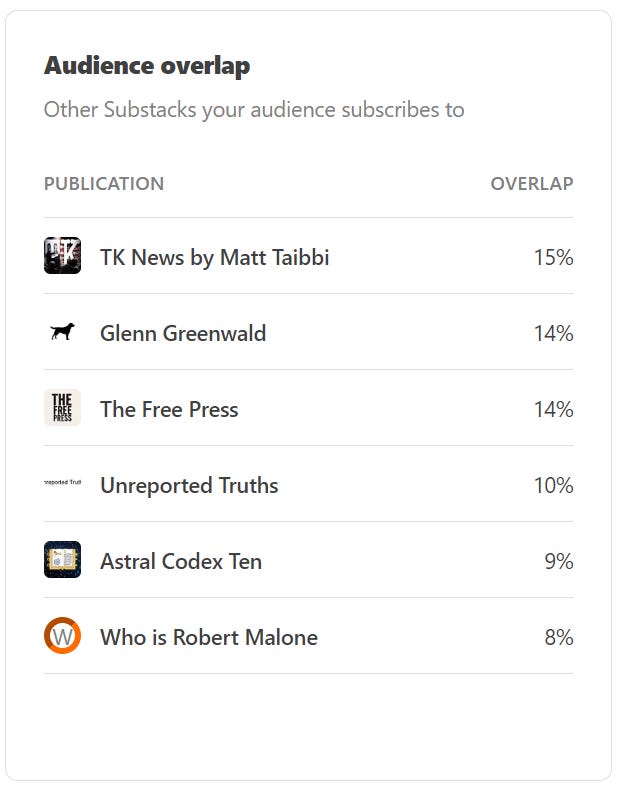

Substack now also breaks readers down by audience overlap. My readers also read … and Robert W Malone MD.

Scandal #1: Update on the Investigations into Enos’ Data Irregularities

Longtime readers of Karlstack will be familiar with my quixotic battle against Harvard’s corrupt department of government. This update is about the corruption of AJPS and PNAS editors and research ethics managers, as well as Harvard officials, who have been aware of this whole situation for several months (I understand several people have contacted them) and should have started an investigation by now.

One academic recently submitted a complaint to Harvard officials, PNAS, AJPS, and Cambridge University Press (CUP). CUP responded, and here is the academic’s response to CUP:

Dear [Name],

Thank you for the follow-up.

In response to your questions regarding the overlap between Enos’ AJPS and PNAS articles and the CUP book: the AJPS and PNAS articles are reprinted in essentially the same form (with only minor, cosmetic changes) as two chapters in the CUP book.

More specifically, if you look at the book's contents at this link:

https://www.cambridge.org/core/books/abs/space-between-us/contents/6EFEE613395773E0E6CE7640462E8394

you will notice that:

* Chapter 5: “Boston: Trains, Immigrants, and the Arizona Question”

essentially duplicates the PNAS article, available here:

https://www.pnas.org/doi/10.1073/pnas.1317670111

* Chapter 6: “Chicago: Projects and a Shock to Social Geography”

is nearly identical to the AJPS article:

https://onlinelibrary.wiley.com/doi/abs/10.1111/ajps.12156;

a freely accessible copy is at Enos’ website:

https://scholar.harvard.edu/files/renos/files/enoschicago.pdf

It is unclear whether Enos republished these articles as book chapters with or without permission from CUP and respective journals.

This means that all the data irregularities in these articles carry over into Chapters 5 and 6 of the CUP book. These include, among others: the author potentially filling out his own surveys in the PNAS study (as the IP addresses he has since deleted from the dataset, and other issues such as respondents aged 1 to 15, appear to indicate); mathematically impossible numbers; the deletion of nearly 900 observations that contradict Enos’ theory (precincts with high turnout and/or Republican vote in close proximity to demolition projects); and the modification of dozens of official data values for turnout (Enos’ changes range from roughly -100 to +500) and Republican vote (Enos’ changes range from -25% to +30%). These irregularities, affecting thousands of datapoints, also implicitly affect other sections in Chapters 1, 2, and 3 of the book that build on and refer to Chapters 5 and 6. For comparison, Michael LaCour (who shared the same PhD advisor as Enos at UCLA) “only” fabricated 972 observations in his now-retracted Science article.

Both journals have refused to investigate properly:

* PNAS: Ms. Yael Fitzpatrick, editorial ethics manager, informed me earlier this month that PNAS had closed its investigation, with no further explanation. No new statement appears to have been released by Enos (since the March 2022 statement in which he simply announced that he had deleted the IPs but did not post any raw data or additional code), and no additional erratum has been issued (since the April 2019 one in which he claimed he had accidentally typed the wrong question wording).

* AJPS: This has been a months-long saga. After initially flat out refusing to investigate, the editors finally launched an investigation into one narrow issue (some mathematically impossible numbers), at the request of a political science professor at Boise State University. Although Enos’ response was unsatisfactory, as he did not meet his burden of proof and did not post any additional data or code, the editors declared the investigation closed. You can find more details on the investigation in this series of articles by an independent investigative Substack writer:

Please note that the hundreds of precincts that are either missing or have values different from official data have never been investigated by AJPS, nor addressed by Enos in public statements, Reddit AMAs, Twitter etc.

Further, even if we accept Enos’ explanation at face value (the original survey data purchased from that company are unreliable and contain biases), it is still the case that his estimates changed across paper versions for inexplicable reasons. No matter how bad the original data quality was, those changes can only be the result of (undocumented) data manipulations performed by Enos. The changes involve increases in effect sizes in the direction of his hypotheses, and estimates that were not statistically significant (their confidence intervals overlapped zero) in previous versions of the paper becoming statistically significant out of the blue in the published article.

Enos removed his dissertation (which contained the first version of the AJPS article) from ProQuest, and previous paper versions from his website (the latter could still be found using the Wayback Machine as of a few months ago).

After Enos’ response, the Boise State professor concluded that this is more likely fraud (rather than incompetence) on the part of Enos, and that the AJPS editors were being unscrupulous.

I also contacted the AJPS editors to point out why Enos’ response was unsatisfactory and which additional issues should have been investigated, but they have remained unresponsive for nearly two months.

A full copy of the original complaint submitted to Harvard for investigation in 2018 and swept under the rug by Claudine Gay (who works in the same race and ethnicity politics niche, and whose work has also been under suspicion of fraud for over 20 years) is available for download at this site (it only concerns the AJPS article / Chapter 6 in the CUP book):

https://www.econjobrumors.com/topic/report-on-ryan-enoss-data-fabrication-available-for-download

There is no doubt that both articles need to be retracted.

The AJPS article (and, implicitly, Chapter 6 of the CUP book) needs to be retracted because it contains results that build on deleted inconvenient observations and on data values that simply do not exist in official sources. Just compare Enos’ dataset with the official Chicago elections data. These results cannot possibly remain in print at the journal and in the book, whether they are the result of fraud or incompetence.

The PNAS article (and, implicitly, Chapter 5 of the CUP book) needs to be retracted because at least some of the responses are clearly not genuine responses from the experimental subjects on those Boston suburban trains (where they were exposed to the “treatment”, i.e. contact with paid actors pretending to be Mexican immigrants). Some appear to have been filled out by the respondents' children to get the $5 reward cards (in the verbatim comments, some say things like “I’m a child, I don’t have the right to vote”). Other responses are suspicious: the respondent's age is 1, 2, or 3 years old, and their only comment is something like “great survey”, despite errors in the survey pointed out by other respondents, such as missing income categories or questions that beg for specific answers. The now-deleted IPs also indicate that respondents who should have been commuting on different trains outside Boston were filling out surveys from the same location: IPs linked to institutions such as Harvard University or Liberty Mutual (an insurance company) in Cambridge, MA. There are two hypotheses here: 1) Enos filled out his own surveys from these IPs, or 2) the commuters worked at these places, but he changed the treatment assignment (commuting train station) to manipulate results in his preferred direction. Obtaining the raw data used by Enos from the Qualtrics company would answer many of the questions surrounding the irregularities in the PNAS article / CUP book Chapter 5.

I hope this helps. Unfortunately, since both journals are refusing to honor their ethical obligations and perform an adequate investigation, the entire burden of investigating these irregularities now falls on CUP. Otherwise, this prestigious press will continue to keep in print findings that are at best the result of monumental incompetence, and at worst the result of an egregious amount of research fraud.

Please let me know if you need any further clarifications or if I can assist in any way with the investigation.

Best regards,

[Name]

_______________________________________________________________________________

AJPS editors: No response yet.

_______________________________________________________________________________

Harvard officials: No response yet.

_______________________________________________________________________________

PNAS response:

Dear [Name],

Following internal review at PNAS it has been determined that no further action is necessary from the journal. We now consider this matter closed.

Kind regards,

Editorial Ethics Manager

Proceedings of the National Academy of Sciences

Pronouns: she, her, hers

_______________________________________________________________________________

CUP response:

It's for those journals to investigate the allegations of data problems.

But it would be helpful if the author would comment on how closely our book is related to/based upon these studies. We'd have to tell him we have been contacted re the allegations and believe they are for the journals to investigate, but are keen to understand any overlap with the book to inform how relevant any conclusions of the journals are to us.

[Signature]

Research Ethics and University Collaboration Coordinator

Cambridge University Press & Assessment

_______________________________________________________________________________

I don’t know what the CUP research ethics coordinator will do now that the magnitude of the overlap has been explained and the journals won’t honor their obligation to investigate. CUP should now launch its own investigation; if that happens, I will publish an update.

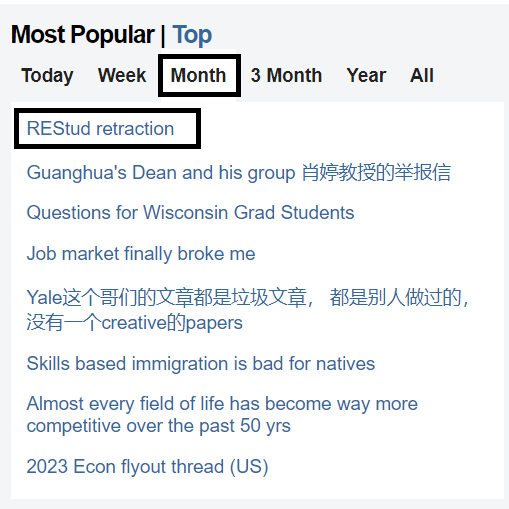

Scandal #2: Retraction at ReStud

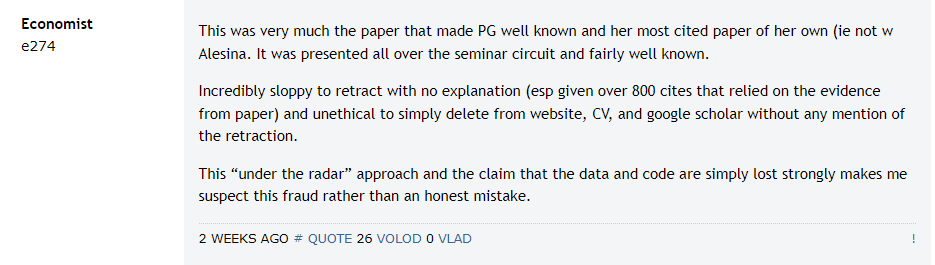

This was the most popular EJMR thread of the last month:

Unlike most scandals discussed on EJMR that are conspicuously ignored, this retraction gained a modicum of traction on #EconTwitter… among white men, at least.

Where are the women speaking up about this retraction by a famous female economist? I couldn’t find any. Do they not care about research misconduct? Are they protecting their own?

The paper in question is Growing up in a Recession, published in the Review of Economic Studies (ReStud), and co-authored by Dr. Paola Giuliano and Dr. Antonio Spilimbergo, two Italians. The former is a professor at UCLA, and the latter is a researcher at the International Monetary Fund.

It is worth noting that Dr. Giuliano almost certainly would not have gotten tenure at UCLA without this paper: ReStud is one of the “top 5” journals in economics, and she was already a borderline case *with* this paper on her CV.

So, she got tenure based on a broken & wrong paper. Classic! Perhaps obtaining tenure could have been the incentive to fudge the code/data, or perhaps this was a bona fide coding error. Impossible to say.

Another possible reason to fudge the code/data is… ideological? The authors found that, when you grow up in a recession, you're more likely to become left-wing. When the analysis is done correctly, it turns out you become more right-wing. This opposite ideological effect was found in Revisiting the Effect of Growing Up in a Recession on Attitudes Toward, which was presented at a seminar on January 8th, 2023. The original “Growing up in a Recession” article was retracted 3 days later, so it’s pretty clear that the “Revisiting” paper was the impetus for the retraction.

The stated reason on the ReStud website for this retraction is:

“The authors and editorial team are retracting this article because the original findings cannot be replicated, likely as a result of an inadvertent coding error. While the original codes and data sets are no longer available, new analysis with a markedly similar data set does not support the original results.”

I wish I could check the code & data to see what this “inadvertent coding error” is… but the code and data have magically disappeared! Slash, they never existed in the first place, despite the fact that ReStud already mandated that code and data be included in submissions when this paper was published back in 2014.

I emailed ReStud to ask why they flagrantly ignored their own data/code disclosure policy, but they did not respond. Likewise, I contacted the authors of this paper to elaborate on the “coding mistake”, but did not receive a response. It seems both the journal and the authors are at fault for some mix of incompetence and/or corruption.

It’s noteworthy that the stated reason for the retraction says “the original codes and data sets are no longer available”. This is not true. Each of the 3 datasets used in the paper is available:

I don’t understand why they lied about the data being unavailable. The data *is* available. My guess is that their code is so wrong that, when they re-ran it, the results didn't hold up without the fudge that the opposing team of researchers found, so they say they can't possibly re-run it.

It’s simply not the case that “the original codes and data sets are no longer available.” It’s only the code that is not available — and this is because the author never bothered to submit the code to the journal, the journal never checked that the code was submitted, and the author never saved the code. In other words, the dog ate my code. Very convenient.

I am guessing this is why they gave such a vague explanation... “If they opted for such a vague one, it is because the problems were numerous.”

It is fraud. If it were just a coding error, the code can be corrected and re-run. No big deal. Would justify an errata. The fact that someone is prepared to give up a top 5 publication shows it is fraud. Otherwise, you would fight for it. Correct the code. Give new results.

— Anonymous economist

It’s possible that the code was actually submitted to the journal at some point, I guess, and they scrubbed it from the internet after realizing how bad it was, but I am unable to provide any evidence to support this conspiracy theory.

Here are some EJMR reactions to this paper:

This paper is already retracted, so what more can I ask for?

In a perfect world UCLA would retroactively revoke her tenure, maybe, since she only earned tenure in the first place on the merits of this paper? I don’t know… Maybe that punishment is too heavy-handed, and anyways, it is never going to happen.

It's a good example for rookies. First you cheat, then you get caught ten years later. In the meanwhile you got tenure, full professor, resources, connections+ another free top publication, big salary. Rookies, learn from this!

— Anonymous economist

Finally, I would note that Dr. Giuliano is a coauthor of Yiming Cao, a blatant fraudster whose crimes I describe at length in this article below.

Birds of a feather.

Scandal #3: Something Smells Fishy at the Journal of Accounting and Economics

I was recently pointed towards this "TEMPORARY REMOVAL" from over a year ago.

Dr. Jody Grewal is an Assistant Professor of Accounting at the University of Toronto Mississauga. She obtained her PhD from Harvard Business School.

This TEMPORARY REMOVAL is noteworthy because subjecting an already-published paper to TEMPORARY REMOVAL is extremely unorthodox (have you ever seen this happen before? I haven’t)… and having that “temporary” status linger for more than a year is even more unorthodox. It’s embarrassing that the journal has not yet retracted the paper, fixed the paper, or at least disclosed the reason for the temporary removal. The fact that it is stuck indefinitely in limbo is… shady.

I reached out to ask about it:

They ignored me.

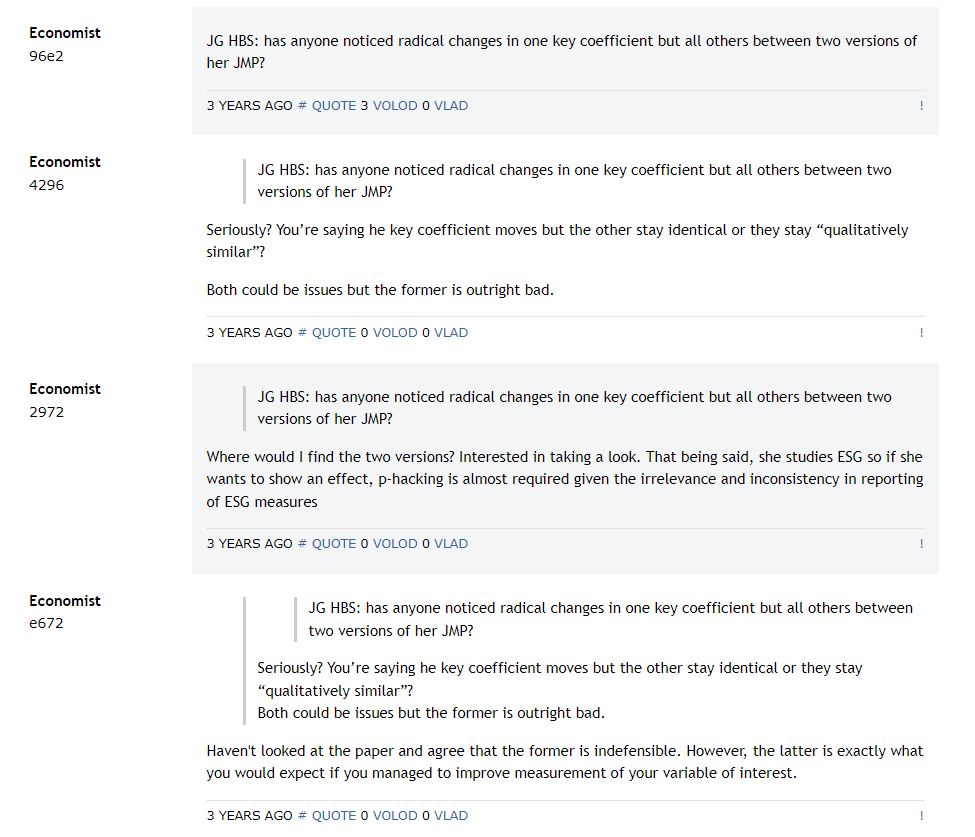

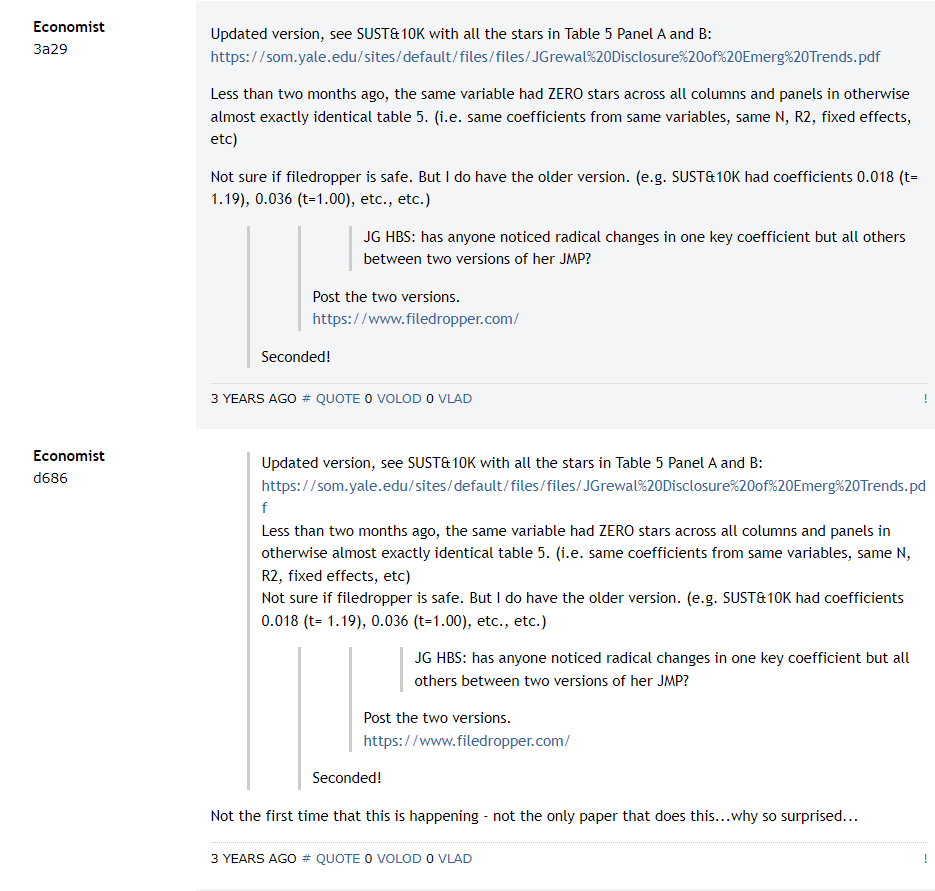

Digging into a 3-year-old, 200+ page EJMR thread, “Accounting Market 2018/19”, yields a clue as to what could be wrong with this paper.

It seems this paper went through major changes from the draft to the final version: some coefficients and stars magically appeared. Sadly, I was unable to find both versions of the paper to track how Panels A and B of Table 5 changed over time.

The issue could be something else, but if I were a gambling man, I would bet that Panels A and B of Table 5 are the issue.

I will provide an update whenever this paper leaves TEMPORARY REMOVAL limbo.

Scandal #4: Temperatures Rise in Finance Academia

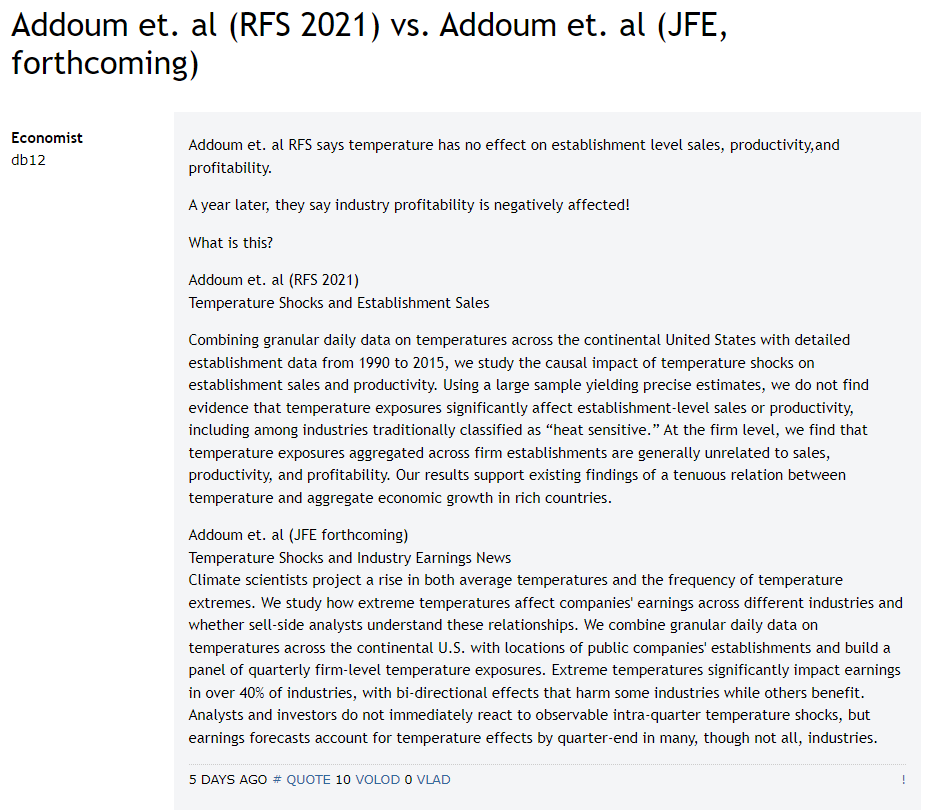

This is another red flag noticed by EJMR in this thread:

What we have here is the exact same team of 3 researchers, writing two papers about the same subject, in the best finance journals in the world, with opposite results.

I reached out to the authors:

Dear Dr. Ortiz-Bobea, Dr. Ng, & Dr. Addoum,

I am an investigative journalist who writes about academia. I noticed this thread on a forum, discussing two of your papers.

RFS (2020) https://academic.oup.com/rfs/article-abstract/33/3/1331/5735303

JFE (forthcoming) https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3480695

The first paper concludes that:

"We find that the effects of temperature shocks are economically small and statistically insignificant,including among industries identified as heat sensitive in prior literature."

The second paper concludes that:

"Extreme temperatures significantly impact earnings."

Does this mean that the first paper is... wrong? Is the newer paper not a direct refutation of your previous findings? If not, why?

I know that the difference is that the second paper breaks the effect of temperature down bi-directionally ("For example, relatively cold winter weather may benefit ski resorts and nearby hotels and restaurants. In contrast, hotels and restaurants may be hurt by cold extremes in the summer months"), but the first paper already concluded that heat-sensitive industries (which presumably refers to hotels and restaurants) are not impacted.

If you could help me reconcile this, to find what is driving the contradictory results, or otherwise point me in the right direction, it would be greatly appreciated.

Thanks,

Christopher Brunet

To which they responded:

Thank you for your interest in our research. As you point out, the difference between the papers is that the first paper focuses on population average effects across industries. The second paper breaks down the effects of extreme temperatures not just by industry, but by quarter for each industry.

We motivate this in the second paper in the following passage: “While Addoum, Ng, and Ortiz-Bobea (2020) find no population-level effects associated with extreme temperatures, certain sectors of the economy may still exhibit sensitivity to extreme temperatures and such sensitivities may be pronounced only during certain months or seasons. Furthermore, the effects associated with extreme temperatures may be bi-directional.”

Thus, while we find no significant effects when examining the entire population in the first paper, we do find evidence of temperature sensitivities at specific times for specific industries in the second paper. The fact that these effects are bi-directional is the reason why both results co-exist (i.e., they “cancel” or average out).

Regarding the heat-sensitive industries, these are defined using the approach of Graff-Zivin and Neidell (2014), and include the following: agriculture; forestry, fishing, and hunting; mining; construction; manufacturing; and transportation and utilities (see footnote 4 in the first paper). Thus, hotels and restaurants are not included in this group.

I hope this helps clear things up. Thanks again for your interest in our work.

I leave it up to the reader to interpret this response as they wish.
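To make the authors' “cancel out” argument concrete, here is a minimal Python sketch. The quarterly effect sizes below are made-up, hypothetical numbers (not taken from either paper), and the equal-weight average is a simplifying assumption standing in for a year-level, population-wide analysis. Each quarter has a nonzero effect, yet the pooled effect is zero.

```python
# Hypothetical quarterly temperature effects on earnings for ONE industry.
# Illustrative numbers only; NOT taken from either paper.
quarterly_effects = {"Q1": 0.4, "Q2": -0.4, "Q3": -0.4, "Q4": 0.4}


def pooled_effect(effects):
    """Equal-weight average of per-quarter effects, a simplified stand-in
    for what a year-level (pooled) analysis would pick up."""
    return sum(effects.values()) / len(effects)


print(pooled_effect(quarterly_effects))  # 0.0: the seasonal effects offset
```

The sketch only shows that offsetting effects *can* produce a null average; it says nothing about whether the industry-by-quarter effects in the second paper are real.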

My interpretation is that this basically confirms the papers are p-hacked. They chose a new configuration and cherry-picked new industries until they got new results. Oh, and this effect only holds when you set up your data quarter-by-quarter, not year-by-year. Got it.
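The p-hacking worry comes down to multiple comparisons: slice the data into enough industry-by-quarter cells, and some will look “significant” by chance alone. Below is a minimal standard-library simulation of that idea. It assumes pure-noise data (the true effect is zero everywhere), a simple z-test on each cell's mean with a known noise standard deviation of 1, and a hypothetical grid of 20 industries × 4 quarters. It is not a replication of either paper.

```python
import random
import statistics
from math import sqrt


def count_false_positives(n_industries=20, n_quarters=4, n_obs=50,
                          z_crit=1.96, seed=0):
    """Count industry-quarter cells that pass a ~5% two-sided z-test
    even though every cell is pure noise (true effect = 0)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_industries * n_quarters):
        # Simulated "earnings responses" with no real temperature effect.
        sample = [rng.gauss(0.0, 1.0) for _ in range(n_obs)]
        # z-statistic for the cell mean; noise sd is known to be 1.
        z = statistics.mean(sample) / (1.0 / sqrt(n_obs))
        if abs(z) > z_crit:
            hits += 1
    return hits


cells = 20 * 4
hits = count_false_positives()
print(f"{hits} of {cells} noise-only cells look 'significant'")
```

With 80 cells and a 5% threshold, roughly 4 spurious “findings” are expected per run, which is why results that appear only in selected subgroups deserve extra scrutiny.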

Is this a “scandal”? Probably not. It’s really just symptomatic of the state of academic finance research: most of the profession’s papers are p-hacked pablum. Don’t hate the player, hate the game. The whole field of academic finance is just kind of… silly. Cutesy p-hacked nonsense to tell a nice story. Years of research by America’s brightest minds, all to find that some hotels do better when it is hot outside, and some hotels do better when it is cold outside, sometimes, only if you rig the numbers in just the right way.

Honestly these papers strike me as if they were written by undergrads. “Hey! Let’s regress profit on… temperature! That should tell a cool story!”

I guess what I am saying is that society would be better served if these 3 genius Cornell professors were ditch-diggers. At least then we would have some holes in the ground to show for it.

Scandal #5: Toxic French Business School Cleans House

I had never heard of “Audencia Business School”, but apparently it is one of the best business schools in France. As per Wikipedia, it is one of the oldest business schools in the world, established in 1900, and it is considered one of the 10 best business schools in France.

Following a series of articles by French outlet “Mediacités” slamming the school's “brutal,” “sexist,” and “toxic” management…

Makram Chemangui, deputy director general of the school, has resigned.

I am not sure what exactly made this business school so brutal, toxic and sexist, but I am sure this resignation is big news in the European business school community.

Scandal #6: Update at the Journal of Accounting Research

Last year I posted these 2 updates:

Looking back on these articles, I have some regrets.

First, my language was too direct and inflammatory; I should have hedged it more. Where I wrote stuff like “FRAUD DEFINITELY OCCURRED! LYING SCUMBAGS!”, if I were writing those articles today, I would couch my language and write something more reserved, like “Evidence strongly points towards the fact that fraud likely occurred.”

Second, the email inquiries I sent to the authors/journals were needlessly pugilistic. I blatantly mocked them and picked a fight with them, trying to goad them into fighting back. If I were making the same inquiry today, I would send a polite, professional, direct email. I’ve learned there is really no upside to picking a fight with professors over email. Much better to be fair and evenhanded, staying detached and at arm's length, rather than getting personally animated.

I take heart, at least, that the fact I cringe at how I handled these situations as a new journalist means I have grown/evolved since then, and I am better off for it. Live and learn.

That being said… I was still right about the massive fraud in this case. Research misconduct occurred, and it was covered up. I should simply have been more dispassionate about pointing it out.

I have an update!

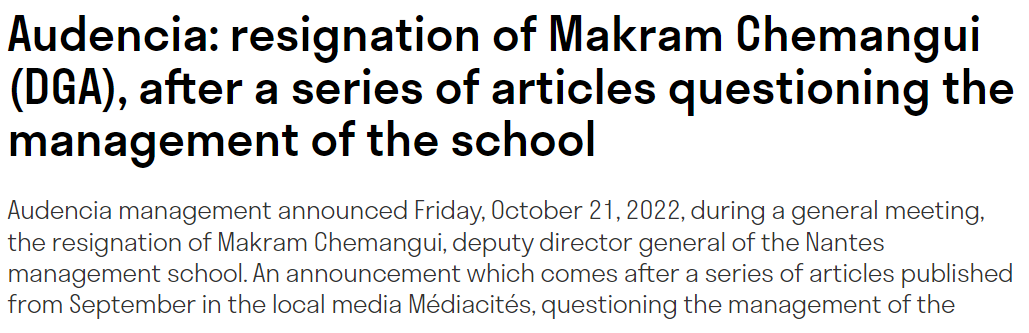

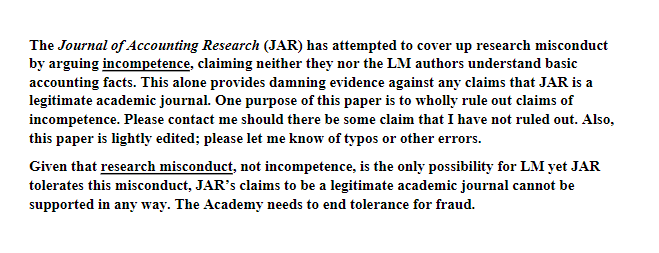

The original whistleblower, from the first article, has just released a working paper outlining the fraud in great detail.

This paper has been criticized in an 80-page EJMR thread for being too emotional — not written in proper/formal academic language — but nobody has yet been able to find any factual errors in it.

The whistleblower has now offered a $1,000 reward for anyone who can find any factual errors in the report. If the authors who participated in this fraud want to prove their innocence, they could simply point out why the whistleblower report is wrong, and collect their $1,000.

Should be easy, right?
